Things started to go awry near Cleveland at 3:06 p.m. on Aug. 14, more than an hour before the largest North American blackout in history. A 345-kilovolt transmission line overheated, sagged into a tree, and automatically shut off to protect itself from damage. Instantaneously, the colossal current it was carrying found a new route, sluicing into a neighboring line. Twenty-six minutes later, the line that picked up the misplaced load was also toast, a victim of overload.

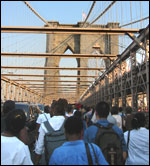

Stranded commuters cross the Brooklyn Bridge during the blackout.

As the mounting flow of wayward electricity kept surging into new routes, three more major transmission lines stretching between Ontario and Pennsylvania failed. By 4:11 p.m., a 9,300-square-mile swath of the Northeastern power grid had been snuffed out. First Cleveland went black, then Toronto, Detroit, Boston, and New York City. In just over an hour, the Eastern Interconnection, one of the greatest achievements of industrial engineering, had been brought to its knees.
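The domino effect described above can be illustrated with a deliberately crude toy model. It assumes, purely for illustration, that the total flow splits evenly among whichever parallel lines remain in service (real power flows divide according to line impedances) and that any line pushed past its rating trips at once. The function name and the line ratings are hypothetical.

```python
def simulate_cascade(ratings_mw, total_flow_mw, first_trip):
    """Toy cascade model (illustrative only).

    Assumes the total flow splits evenly among the parallel lines still in
    service, and that any line pushed past its rating trips immediately.
    Returns the lines that trip in each round and the lines left standing.
    """
    in_service = [i for i in range(len(ratings_mw)) if i != first_trip]
    rounds = [[first_trip]]
    while in_service:
        share = total_flow_mw / len(in_service)  # flow each survivor must carry
        overloaded = [i for i in in_service if share > ratings_mw[i]]
        if not overloaded:
            break  # the survivors can absorb the load, so the cascade stops
        rounds.append(overloaded)
        in_service = [i for i in in_service if i not in overloaded]
    return rounds, in_service


# Hypothetical ratings for five parallel lines carrying 3,000 MW in total.
ratings = [1000, 800, 900, 1200, 700]
rounds, survivors = simulate_cascade(ratings, 3000, first_trip=0)
print(rounds)     # [[0], [4], [1, 2], [3]]
print(survivors)  # []
```

Before the first trip each line carries 600 MW, within every rating; once line 0 drops out, each round of failures loads the survivors more heavily until nothing is left. With more generous ratings (say, 1,000 MW on every line) the same first trip stops after one round.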

At the same time, the 50 million people living in the affected region woke up to the fact that modern life and commerce hang from one increasingly fragile thread: the 200,000 miles of high-voltage wire that make up the North American electricity grid. It’s not that the world’s richest superpower has an antediluvian electricity system on the verge of collapse, as some critics have suggested in the last two weeks. In fact, our electricity grid is 99.9 percent reliable, as good as any in the world. But we’ve got a problem: Electricity demand in the U.S. has grown far faster than the rate at which utilities have added new transmission wires, or invested in energy efficiency and on-site generation.

Since 1990, overall electricity demand in the U.S. has grown a whopping 35 percent, largely because the technology boom has brought energy-hungry devices, from iMacs to Xboxes, into millions of households. During that same period, total investment in upgrading the electricity grid has grown only 17 percent. What’s worse, investment in energy efficiency and in distributed energy systems such as solar panels and fuel cells (which feed electricity directly to the buildings where they’re installed, reducing the need to import power over transmission lines) has grown even less. And though the high-tech boom has subsided, the Department of Energy predicts that power demand will continue to grow 2 to 3 percent per year for the next few decades.
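Modest-sounding annual growth rates compound quickly. This short sketch applies the DOE's 2-to-3-percent range from the paragraph above, assuming (for simplicity) that the rate holds constant; the 20-year horizon is an illustrative choice, not a DOE figure.

```python
import math


def growth_multiplier(annual_rate, years):
    """Factor by which demand grows at a constant compound annual rate."""
    return (1 + annual_rate) ** years


def doubling_time(annual_rate):
    """Years for demand to double at a constant compound annual rate."""
    return math.log(2) / math.log(1 + annual_rate)


for rate in (0.02, 0.03):
    print(f"{rate:.0%} a year: x{growth_multiplier(rate, 20):.2f} after 20 years, "
          f"doubles in about {doubling_time(rate):.0f} years")
# 2% a year: x1.49 after 20 years, doubles in about 35 years
# 3% a year: x1.81 after 20 years, doubles in about 23 years
```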

Corridors of power.

Photo: NREL.

“This is the fourth catastrophic failure of the central power grid within the last decade, and yet decision-makers are not learning the right lessons from these crises,” said Kyle Datta, managing director of the Rocky Mountain Institute’s energy consulting practice. “They invariably clamor to make the exact same system larger, bigger. But we have to look at the central design flaw of the system: it’s over-centralized, it’s over-concentrated” — and it’s a far cry from efficient.

Indeed, in responding to the blackout, President Bush said it was not only a wake-up call to expand the electricity grid, but also a good reason to approve his misbegotten energy bill. The bill contains a measure that would force utilities to comply with stricter reliability standards. Most environmentalists endorse those standards, but they believe the standards are so urgently needed that they should be passed separately, rather than getting mired in Congress or serving as an excuse to pass other controversial measures, such as drilling for oil and gas in the Arctic National Wildlife Refuge.

Unlike Bush, Datta and other energy experts see the 2003 blackout as a clarion call not just to expand the existing leaky-bucket network, but to accelerate research and development of a radical new energy network: one that’s adaptive, self-healing, and compatible with distributed, on-site energy sources. It would have sensors to anticipate crises, circuits to redirect wayward currents, and a computerized “brain” that would automatically power down noncritical electricity loads, such as dishwashers or daytime lighting, when demand is peaking. (Peak demand usually occurs at midday during the summer, when businesses are humming, air conditioners are cranking, emissions are at their worst, and electricity is most costly.) This revolutionary energy grid would be both environmentally and economically smart, and would operate something like the Internet.

“Today’s existing electrical grid is structured roughly the way IBM designed computing in the 1960s: large, centralized mainframes networked together in a hub-and-spoke arrangement,” said Datta. What computing has since evolved into, of course, is a modular, decentralized system in which distributed computers are networked together so that if one of them fails, it can be isolated from the rest. Like that distributed system, the energy grid of the future will be, if all goes well, impossible to shut down.

Small Is Beautiful

For the last two decades, major utility and technology companies worldwide have been pouring billions of dollars into the research and development of just such a system — which is, after all, imperative for the survival of the digital era. The grid may provide 99.9 percent reliability (“three-nines reliability” in energy speak), but that 0.1 percent chance of failure is a serious problem in our warp-speed society, where even fleeting blackouts can cause massive losses to commerce. Hewlett-Packard, for instance, has estimated that a 15-minute outage at one of its chip factories would cost the company $30 million.
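Read as the share of time the power is on (the usual sense of a "nines" figure, and an assumption here), three-nines reliability still allows several hours without power each year. The arithmetic is easy to sketch; the four- and five-nines rows are included only for comparison and are not figures from this article.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def expected_outage_hours(availability):
    """Hours per year without power, reading availability as a share of time."""
    return (1 - availability) * HOURS_PER_YEAR


for label, availability in [("three nines", 0.999),
                            ("four nines", 0.9999),
                            ("five nines", 0.99999)]:
    hours = expected_outage_hours(availability)
    print(f"{label} ({availability:.3%}): {hours:.2f} hours "
          f"({hours * 60:.1f} minutes) per year")
# three nines (99.900%): 8.76 hours (525.6 minutes) per year
# four nines (99.990%): 0.88 hours (52.6 minutes) per year
# five nines (99.999%): 0.09 hours (5.3 minutes) per year
```

Set against Hewlett-Packard's estimate of $30 million for a single 15-minute outage, even the five-nines row leaves a costly gap.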

Renewables are in vogue at the Conde Nast Building.

Photo: NREL.

As the financial stakes of blackouts get higher, more and more high-tech companies, hospitals, stock exchanges, media outfits, and even hotels are investing in their own on-site generation. Indeed, during this month’s blackout, it was the distributed-generation components of the grid that saved the day, providing reliable power to the life-support systems in New York City’s Mt. Sinai Hospital, to the trading floor of the New York Stock Exchange, and to newspaper presses and the production facilities of news networks. Granted, most of these emergency-generation systems run on dirty diesel engines that carry only enough fuel to last a few days, but an increasing number are clean and long-lasting, like the fuel cells and solar panels on the Conde Nast Building in Times Square or the natural-gas-powered microturbines in the nearby Reuters Building. Whereas it takes days to bring big central power plants back online after a disturbance, the small-scale systems start up almost instantly.

In the long term, these flexible, responsive, sustainable systems could be built into every home, factory, and office building in the nation. But that kind of wholesale transformation will take many decades, and it will not obviate the need for an electricity grid: Distributed systems will need to be networked together so they can pump electricity back into the grid when they produce a surplus and draw from the grid when necessary (in the case of solar systems, at night or on cloudy days). Thus the first lesson to be learned from the blackout is that we must strengthen the rules that govern the operation of the current grid and the system for enforcing them.

“Aug. 14 was a hot day and air conditioners were cranking, but the grid was not under any record-breaking stress. The fact that it crashed tells us that in all likelihood someone was simply violating reliability rules,” said Ralph Cavanaugh, codirector of the Natural Resources Defense Council’s energy program and former advisor to the energy secretary during the Clinton administration.

Charge!

Photo: NREL.

One of the likely violators was Ohio’s FirstEnergy Corp. (a major contributor to the Bush campaign, as it happens), which allowed that first transmission line in Cleveland to overload. Reliability rules dictate that utilities should never run their lines at full capacity, so that if one line goes down, the others still have room to carry its power. But a utility that keeps spare capacity on its system is sacrificing sales volume and revenue, which creates a constant incentive to cheat.
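The "never run at full capacity" rule is the idea behind what grid engineers call an N-1 contingency criterion: the system should survive the loss of any single element. The sketch below checks that for a handful of parallel lines under a strong simplifying assumption, namely that flow redistributes in proportion to each surviving line's rating, so the question reduces to whether the survivors' combined rating covers the flow. Real contingency analysis relies on power-flow models; the function names and line ratings here are hypothetical.

```python
def n_minus_1_check(ratings_mw, total_flow_mw):
    """For each single-line outage, report whether the surviving lines'
    combined rating can still carry the total flow.

    Simplifying assumption: flow redistributes in proportion to each
    surviving line's rating, so only the combined rating matters.
    """
    results = []
    for out in range(len(ratings_mw)):
        surviving = sum(r for i, r in enumerate(ratings_mw) if i != out)
        results.append((out, surviving, total_flow_mw <= surviving))
    return results


def max_secure_flow(ratings_mw):
    """Largest total flow that survives losing any one line (the biggest one)."""
    return sum(ratings_mw) - max(ratings_mw)


ratings = [1000, 800, 900, 1200]  # hypothetical line ratings, MW
for out, surviving, ok in n_minus_1_check(ratings, 3000):
    print(f"lose line {out}: {surviving} MW left -> {'secure' if ok else 'OVERLOAD'}")
print(max_secure_flow(ratings))  # 2700
```

With 3,000 MW on lines rated for 3,900 MW in total, the system is only about 77 percent loaded, yet losing line 0 or line 3 would overload the rest; staying N-1 secure means holding the flow at or below 2,700 MW. That unused headroom is exactly the forgone revenue that tempts utilities to cut corners.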

To make matters worse, reliability rules are not really rules; they are voluntary guidelines with no federal mandate behind them. They were formulated in the late 1960s by the North American Electric Reliability Council (NERC), which was founded in the wake of the great Northeast blackout of 1965. At the time, the industry was dominated by a small handful of transmission owners that could easily be held accountable for problems, and the guidelines worked reasonably well for several decades. But ever since the electricity grid was deregulated in the early 1990s, its use and control have splintered among too many energy companies for all of them to be monitored closely. Since 1998, NERC has been pressing Congress to make its voluntary measures mandatory.

“Those mandatory rules have been sitting in Congress for years, buried deep in pork-barrel energy bills,” said Cavanaugh. “It’s time to free these hostages from the [stalled-out] bill and pass them separately. If we are going to take measures to avoid future energy crises, finding a way to pass reliability laws would be at the top of my list.”

Cavanaugh also recommends legislation to encourage energy efficiency: “There is no doubt that grids under stress crash more readily than grids that are not under stress. And the fastest, cheapest, and cleanest solution to stressed power grids is more efficient electricity use.” First and foremost, we need to reverse the rollback of air-conditioner efficiency standards that the Bush administration enacted early in its term. (A challenge to the rollback is now pending in the federal appeals court in New York City.) Meanwhile, a host of other efficiency measures designed to streamline commercial operations are languishing at the DOE. Cavanaugh cites three such efficiency standards (covering equipment such as commercial air conditioners and boilers) that together could reduce electricity demand by 25,000 megawatts over the next 20 years, the equivalent of shutting down 50 giant coal-fired power plants.

The other challenge is to get utilities to offer customers incentives to use electricity more efficiently once again, as many did in the ’70s and ’80s, before deregulation. It may seem counterintuitive for utilities to persuade customers to buy less of their product, but building new high-voltage transmission lines costs hundreds of millions of dollars (at least), and the 100-foot-tall towers or underground conduits that carry them can be politically fraught to site and license. (Some cities, such as San Francisco and New York and their surrounding suburbs, are already so densely developed that it’s nearly impossible to lay any new incoming transmission lines at all.) So simply paying customers to ratchet down their ever-climbing demand, by investing in energy-efficient appliances or switching off the lights when they leave the house, is a more viable option for some utilities than adding new capacity.

Network Solutions

Overall, though, utilities have been reluctant to modernize their transmission capacity because they don’t see a return on the investment. A study released after the blackout by the industry-sponsored Electric Power Research Institute put the needed investment at $100 billion. If private utilities put up that money, they would recover it through residential and commercial rate increases of about 10 percent. The question, of course, is how that money would be spent. EPRI’s funders, many of them old-guard utilities, together generate 90 percent of the electricity used in the United States; yet the organization is surprisingly savvy about the limits of the traditional system and the long-term importance of environmentally sound solutions.

And we’ll have sun, sun, sun …

Photo: NREL.

“We’re well aware that some of the existing technology puts us on a collision course with the environment,” said EPRI spokesperson Brent Barker, “and that in the long run this could be our biggest barrier to development. And we are aware that the future technology is going to become cleaner — that the most promising set of innovations in the energy industry leads us in the direction of improved efficiency and environmental performance.” The implication is that industry is beginning to realize that the sooner it incorporates nonpolluting technologies, the better prepared it will be for penalties that look inevitable down the line, such as carbon taxes and stricter pollution standards.

This is exciting, pie-in-the-sky talk, but it’s not exactly clear how much of it is translating into action. The image EPRI paints of the future electricity grid is not unlike what you hear in the prophecies of NRDC or the Rocky Mountain Institute: a smart energy network with a diversified portfolio of resources located closer to the point of use, pumping out low- or zero-emissions power in backyards, driveways, downscaled neighborhood power stations, and even fuel-cell cars, meaning consumers would actually live and drive in their own little power plants. The EPRI system would also have a centralized “brain” that would enable the appliances in every building to respond to the activity in the rest of the grid.

“Imagine an air conditioner that receives constantly updated market signals about the price of electricity on the grid and knows what the other air conditioners in the vicinity are doing,” said Barker. “By shedding demand when energy is expensive, or when less green power is available, such devices could lighten the load when the grid is in a state of stress.”

Cool, calm, and connected?

Now imagine if this model included other noncritical appliances: water heaters, fans, thermostats, and lighting in warehouses and malls. The grid could automatically make adjustments to those appliances so minor — increasing office air conditioning temperatures one or two degrees, say, or making overhead lights a shade dimmer — that they would be undetectable to users. But in a 40-story high-rise, such changes could save huge amounts of electricity.
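A price-responsive air conditioner of the kind Barker describes could, in its simplest form, nudge its cooling setpoint up as the price signal rises. The sketch below is entirely hypothetical: the class name, the linear ramp, the base setpoint, and the price thresholds are illustrative assumptions, with the adjustment capped at the "one or two degrees" mentioned above. It leaves out the coordination with neighboring units that Barker also describes.

```python
from dataclasses import dataclass


@dataclass
class PriceResponsiveThermostat:
    """Hypothetical controller that raises its cooling setpoint as power prices rise.

    All defaults are illustrative assumptions, not figures from any utility.
    """
    base_setpoint_f: float = 74.0   # normal cooling setpoint, degrees F
    max_offset_f: float = 2.0       # cap matching the "one or two degrees" in the text
    normal_price: float = 0.10      # $/kWh at or below which nothing changes
    peak_price: float = 0.30        # $/kWh at or above which the full offset applies

    def setpoint(self, price_per_kwh: float) -> float:
        # Ramp the offset linearly between the normal and peak prices,
        # clamping the fraction to [0, 1] so the cap is never exceeded.
        span = self.peak_price - self.normal_price
        fraction = (price_per_kwh - self.normal_price) / span
        fraction = min(max(fraction, 0.0), 1.0)
        return self.base_setpoint_f + fraction * self.max_offset_f


thermostat = PriceResponsiveThermostat()
for price in (0.08, 0.20, 0.45):
    print(f"${price:.2f}/kWh -> setpoint {thermostat.setpoint(price):.1f} F")
# $0.08/kWh -> setpoint 74.0 F
# $0.20/kWh -> setpoint 75.0 F
# $0.45/kWh -> setpoint 76.0 F
```

A linear ramp is just one simple choice; it keeps each individual change small enough to go unnoticed while letting thousands of units shed load together as prices climb.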

It sounds great, but even EPRI admits that, practically speaking, such a vision is a long way off. Though EPRI engineers are hard at work on smart-grid innovations, the emphasis has been more on the self-healing aspects (better sensors to predict and preempt line overloads and to protect against damage from terrorist attacks) than on the “smart-meter” features that would communicate with consumer appliances and aid in grand-scheme energy efficiency. Not surprisingly, the former attract more funding than the latter.

And yet, some of these efficiency technologies have begun to reach the market, in rudimentary form at least. In 2001, Puget Sound Energy in Washington state began offering residential customers discounted power at night and on weekends (when demand is low) and installing smart meters that relay price signals to homes. In 2000, technology company Sage Systems introduced an Internet-based product called Aladn that lets customers go online to monitor and adjust the energy consumption of home devices such as lights, kitchen appliances, and air conditioners, so they can save money on their electricity bills during peak load times. Sage Systems also developed software that lets utilities scale down load by setting back thousands of customers’ thermostats at once over the Internet. Such products are hardly mainstream at this point, but they are clearly feasible.

The Bonneville Power Administration, which provides nearly half of the electricity in the Pacific Northwest, has been developing a program called “Non-Construction Alternatives,” which aims to eliminate the need to build new transmission lines. Brian Silverstein, BPA’s manager of network planning, has been working on pilot projects with major industrial customers to help them reduce demand during peak load times. He is also facilitating an ongoing roundtable discussion among all the different players (utilities, environmentalists, manufacturers, technicians, and so forth) to learn how to make non-construction alternatives work for everyone involved. “We are definitely blazing a new trail to reduce the need for wires as we look to applying demand reduction or distributed resources,” he said. “But still, we have to address this question called lost revenues. If utilities see lost volumes of sales when they look to a future of radical efficiency, needless to say, they will be less likely to deploy the upgrades.”

NRDC’s Cavanaugh, who is a consultant to the Bonneville project, has proposed that utilities “decouple” what they earn from the quantity of electricity it takes to provide a service. In other words, consumers would pay utilities for the fact that their lights stay on, rather than for the amount of electricity used to keep them on. This, after all, is how Thomas Edison imagined it in the first place: His ambition was not to sell light bulbs or wires or electrons separately, but to integrate all technologies and services to sell illumination itself. If this had been FirstEnergy’s goal, and its revenue source, the company would have gone to great lengths in the days leading up to Aug. 14 to keep the lights on.