Are genetically modified organisms just too risky to mess with? For about a year, I’ve been mulling a paper that answers this question with a loud, clear “yes.” The paper wasn’t peer-reviewed or published in a scholarly journal, but I think it is worth examining because it helps explain a rational fear at the base of a lot of the opposition to GMOs.

The paper is a collaboration among five academics led by Nassim Nicholas Taleb, the risk analyst who became famous for predicting the economic crash of 2008 and for his bestselling books, including The Black Swan. The concept of a black swan is central to this particular paper: A black swan stands for an event that people think can’t happen, but that actually does happen on rare occasions. That is, people used to say, there are no black swans, swans are white! Then Europeans reached Australia and found black swans living there.

Taleb has done admirable work detailing the simplistic and irrational ways the human mind tends to deal with such low-probability events. In this paper on GMOs, Taleb et al. are asking us to consider what to do about the possibility of genetic engineering leading to a black swan event. What if just the right combination of genes produces an organism that spreads out of control and leads to “total irreversible ruin, such as the extinction of human beings or all life on the planet”?

The authors define two different categories of hazards. In the first category are hazards whose potential consequences are locally constrained. For example, a nuclear power plant could melt down and cause harm, but mostly within its vicinity. This is not a systemic risk that could cause “irreversible ruin.” GMOs belong in the second category: hazards with the potential to trigger systemic collapse because they are self-replicating and not entirely under our control. The authors argue that, even if the chance of some apocalyptic worst-case scenario is infinitesimal, it’s worth taking seriously, because any odds greater than zero become significant when we roll the dice enough times. Sooner or later the black swans appear. That may be tolerable if the destruction is local, but not if it completely extinguishes humanity.
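Here is a rough way to see why small odds add up. This is my own illustration, not a calculation from the paper, and it assumes each “roll” is independent and carries the same small probability p of ruin. The chance of at least one ruinous outcome in n rolls is then:

```latex
P_{\text{ruin}}(n) = 1 - (1 - p)^{n} \approx 1 - e^{-np},
\qquad \lim_{n \to \infty} P_{\text{ruin}}(n) = 1 \text{ for any } p > 0

% Illustrative (assumed) values, not from the paper: p = 0.001 per roll, n = 1000 rolls
P_{\text{ruin}}(1000) = 1 - 0.999^{1000} \approx 1 - e^{-1} \approx 0.63
```

Under these assumed numbers, a one-in-a-thousand risk per roll becomes roughly a 63 percent chance of ruin after a thousand rolls. Real hazards are rarely independent or constant from roll to roll, so this is a sketch of the logic rather than an estimate of any actual risk.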

So far, I’m on board with this argument. It’s a subtle way of dealing with unknown unknowns. We often don’t know about the dangers of a technology until after it hurts someone. But we can roughly approximate which technologies have the potential to cause global catastrophe and set them apart. The introduction of a new chemical always has the potential to cause unforeseen health problems, but it won’t kill everyone, and so it doesn’t fall into the category Taleb and his coauthors have defined as qualifying for a precautionary approach. On the other hand, you could imagine a scenario in which artificial intelligence found a way to evolve, self-replicate, and take over the world: a global catastrophe, worthy of precaution. Even if it seems very unlikely, we can imagine how it might happen.

For systemic hazards, which qualify for a precautionary approach, Taleb and friends write: “The potential harm is so substantial that everything else in the equation ceases to matter. In this case, we must do everything we can to avoid the catastrophe.” Which basically means, shut it all down. Avoid, they write, “at all costs.” And this is where they start to lose me.

My problem? If we shut down everything that falls in this potential-for-ruin category, we’re shutting down an awful lot of good stuff. And mediocre stuff. And just about everything with a pulse. It means shutting down all life. Anything with the ability to reproduce and spread and evolve contains the potential for ruin.

Anything that self-replicates could be ruinous

Now Taleb and the coauthors disagree on this: They say that natural life is different. Because evolution works from the bottom up, they write, it doesn’t contain the same potential for ruin as a top-down engineered seed. The idea is that natural reproduction and evolution are safe because, if something goes wrong, it will go wrong only locally and nature will stamp it out. They argue that the co-evolutionary process (the give and take between species in a local ecosystem) ensures that disasters happen early, and at a small scale. The authors are saying that naturally occurring monsters with the potential to catastrophically destabilize the environment will wipe themselves out before they can cause a global catastrophe.

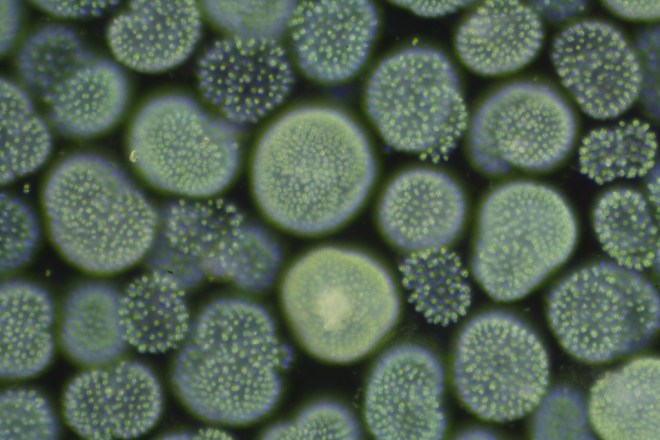

I think they’re dead wrong about this. Not only is it theoretically possible for natural, bottom-up tinkering to create global black-swan events, but such events have already occurred. Some 2.5 billion years ago, nature created a new biological technology: a cellular device that converted sunlight into energy but also released a corrosive, highly reactive, and poisonous gas called oxygen. It thrived and spread and multiplied. The oxygen it produced, and the climate change that followed, triggered a mass extinction; the episode is now known as the Great Oxygenation Event, or the Oxygen Holocaust. Then there’s “the Great Dying,” the black swan event that wiped out some 90 percent of all Earth’s species at the end of the Permian period. What caused it? One proposed explanation for this mass extinction is the evolution of a new kind of microbe.

Now, it’s absolutely true that the strategy of tinkering from the bottom up sometimes keeps disasters local. For example, a disease might evolve to reproduce so successfully that it kills its host, wiping itself out. But often, bottom-up tinkering does just the opposite: It strengthens organisms until they are fit to spread and multiply. Sometimes there’s no equivalent to the disease killing its host, and organisms keep thriving and spreading despite having radically destabilized their ecosystem.

People (myself included) tend to have a powerful intuition that nature is balanced and nurturing. And in a sense that’s true: Every fiber of my being was shaped to fit into this beautiful ecosystem that we know and love. But if you look back into deep time, my beloved ecosystem looks like a momentary blip. As biologist Daniel Botkin has pointed out, nature does not trend toward balance or stasis — it fluctuates, booms, and crashes.

Forget nature — what if humans just avoid potentially ruinous technology?

[Image: Seeds (of doom) at the Australian PlantBank. Photo: Jerry Dohnal]

Even if we accept that nature contains the potential for ruin, we might argue that it would be a good idea to limit further risk by avoiding the technologies that contain the potential for ruin. It might not decrease our risk very much: Microbes in the soil and water are swapping genes between species and creating new organisms at a rate that would put a billion Monsantos to shame. But never mind — perhaps we are obliged to do all we can to reduce the risk of global catastrophe, even if we can only reduce it by a marginal amount.

If so, we should ban the engineering of transgenic organisms. At the same time, we’d also want to ban mutagenesis of seeds, cloning, tissue culture, marker-assisted breeding, and high-throughput genotyping. You see the problem here? Every form of breeding creates a potential for ruin. In fact, every time we plant a seed, we create a non-zero chance of unleashing a monster. But the alternative is starvation.

There are, of course, real differences between various forms of genetic engineering and other kinds of breeding, but they are not categorical differences. Any time we deal with something that is self-replicating, and self-supporting, and self-spreading, we are in the realm of potential for a systemic black swan. All forms of biological technology, up to and including subsistence farming, belong in the category of things with the potential to cause global ruin.

Surely some technologies are riskier than others

[Image: A ship is loaded with soybeans at Santos Port, Brazil. Photo: REUTERS/Paulo Whitaker]

Once we get past that categorical issue, we might still say: OK, even if all biology contains the potential for ruin, we should still compare and contrast and try to figure out which technology carries more risk of causing systemic collapse. Absolutely. But now we are in a different realm: Instead of asking an elegantly simple categorical question, we are asking a down-and-dirty relative-risk question. The question has shifted from “avoid at all costs” to “let’s figure out whether X is significantly riskier than Y.”

Taleb and company wade into this a bit. They point out that genetically engineered seed is often globally distributed — it goes big and wide far faster than a species evolving in the wilderness would. I agree that the more we depend on just a few crops, the greater the potential for collapse (causing famine, not extinction). But this is really an argument for diversity and against bigness. We should take those problems on directly, rather than trying to get at them sideways through GMOs. Genetic engineering isn’t the cause of homogeneity or large-scale production. If we did away with GMOs, we’d still have top-down engineered seeds distributed across the globe each year. Modern production agriculture — both conventional and organic — is just a long, long way away from farmers moving in a slow, co-evolutionary relationship with their crops.

Here, the authors are hindered by a lack of expertise in agriculture and plant breeding. They try to pre-empt this critique by invoking what they call the carpenter fallacy: If we wanted to win at roulette, would we consult a probability expert, or a carpenter who makes roulette wheels? The former, certainly. But, as the economist Jason Lusk points out:

> there is a huge assumption being made here: that the probability expert knows how the wheel is made — how many possible numbers there are, whether the wheel is balanced, whether the slots in the wheel are of equal size, etc., etc. In short, a good probability theorist needs to know everything the carpenter knows and more.

Now, a probability expert who doesn’t fully understand how roulette works can still point out that we should stay away from the table in the corner, because it destroys the world every time the ball lands on 12. But if every roulette wheel (not just the one in the corner) generates a small probability of ruin, and we have to play or starve, then we really do need a probability expert who also understands every detail of roulette wheel construction. That is, if there isn’t a clear, categorical distinction between our technological choices, we have to turn back to the imprecise business of weighing relative risks, which requires the expertise of both biologists and risk analysts.

Here’s why this matters

To recap: I think the authors are basically right to say that anything that could cause “global ruin” is worth treating with caution. They are also right to note that GMOs are categorically different from many other technologies (like chemicals or nuclear energy) because we put them outside, their pollen flies, and they self-replicate. And that’s the key point: The fear of GMOs leading to global ruin isn’t crazy. It is a rational fear.

This may be why so many conversations on GMOs go nowhere. Lurking in the background of the debate is a fear that genetic engineering will create a rogue organism that will somehow destroy the world as we know it. Experts tend to dismiss this concern. Instead they should acknowledge that this is a rational assessment of risk. It’s just that this risk isn’t unique; it’s a basic, unavoidable fact of our daily lives, with or without gene-modification technology.

There’s a quirk in the way we think about risk: We are acutely sensitive to the potential for new risks, but numbed to the risks we live with every day. When we hear about the risks of some new seed-breeding technology, the potential for ruin looms large. People with knowledge of the technology then try to mollify our fears by saying, “Hey, don’t worry, the chances are low.” This isn’t reassuring. The layman quite rationally responds, “I don’t care if the chances are low, I don’t want to take any chances!”

At that point, communication breaks down. The laymen are reacting to the categorical potential for ruin that Taleb et al. identify, which makes the technical details about relative risk seem irrelevant and irrational. But perhaps — just perhaps — the conversation might advance if the experts conceded that genetic engineering really does contain the risk of ruin, and then helped lay people see that every other option, including the status quo, should evoke the same fear.

If we are able to see the risks of the status quo, the GMO issue stops looking like the introduction of something potentially ruinous into Eden. Instead, all of agriculture begins to look like a series of choices between various types of potentially ruinous technology. It would be hard — perhaps impossible — to take in the uncomfortable fact that humanity is dicing with ruin on a daily basis. But accepting this reality also opens up exciting possibilities: Instead of tiptoeing around Nature as if it were a statue in a museum, we could take it into our arms and dance. We may be fools dancing on the edge of the precipice, but once we’ve accepted that inevitability, we might as well dance.