Climate change is a serious security risk to the United States: the Department of Defense, the Joint Chiefs of Staff, and the White House have affirmed as much in various reports and proclamations. It’s become a popular talking point among climate hawks. Nonetheless, there hasn’t been enough thinking, at least outside nerd circles, about what it would mean to approach climate change as a security problem. What exactly would that look like?

Typically, economists and policymakers have viewed climate change through the lens of cost-benefit analysis, where the goal is efficiency, the right balance between pollution reduction and economic growth. The idea is to determine the cost that a ton of carbon imposes on society and price that cost into markets. Voilà: the optimal solution.

Unfortunately, climate change is subject to a degree of uncertainty that renders any such attempt at optimization arbitrary and brittle. Decisionmaking under deep uncertainty calls for an emphasis on robustness and resilience — plans that can hold up reasonably well under a wide variety of possible outcomes.

It’s important to realize, however, that climate change is not unique in this regard. We make decisions in the face of deep uncertainty all the time: in finance, in resource management, in infrastructure planning. In particular, national security decisions are often made on the basis of incomplete, unreliable, or shifting information. If you think planning for climate change is bad, try making decisions today based on China’s military posture in 2050. Now that’s uncertain! Nuclear proliferation, terrorism, disease epidemics … they all require taking action in a fog.

Obviously, many national security decisions have turned out to be awful. Nonetheless, over time, there has been a great deal of learning about how to operate under uncertainty. The approach used in national security circles falls under the rubric of risk management. How would it look applied to climate change? That, as it happens, is the subject of a fantastic report from green think tank E3G: “Degrees of Risk: Defining a Risk Management Framework for Climate Security.” It came out in early 2011, but somehow I missed it at the time (thanks to ace energy blogger Adam Siegel for bringing it to my attention). I shall attempt to convey the gist of it, but I highly recommend reading at least the short executive summary [PDF].

First, what is risk? In this framework, it means probability times severity. In other words, the risk of X is the probability of X happening multiplied by the severity of X should it occur. It follows that risk can be meliorated in two ways: reducing probability and reducing severity.
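To make that definition concrete, here is a minimal Python sketch. The function name `risk` and all of the numbers are hypothetical, chosen only for illustration; the report itself does not supply them.

```python
def risk(probability: float, severity: float) -> float:
    """Risk as probability times severity, per the definition above."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return probability * severity


# Hypothetical numbers: a 25 percent chance of an event
# with a severity of 100 (in arbitrary units).
baseline = risk(0.25, 100.0)

# Lever 1: halve the probability, leave severity alone.
less_likely = risk(0.125, 100.0)

# Lever 2: leave probability alone, halve the severity.
less_severe = risk(0.25, 50.0)

print(baseline, less_likely, less_severe)  # 25.0 12.5 12.5
```

In this toy case either lever cuts the risk in half, which is the sense in which there are two ways to bring risk down.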

Humans have an unfortunate tendency to dismiss risks when they are uncertain — to confuse uncertain risk with low risk — which turns out to be an exceedingly poor strategy, especially in relation to long-term risks. In fact, greater uncertainty can mean greater risk. One of the authors of the report, Jay Gulledge, illustrates this point with a graph in a separate presentation [PDF]:

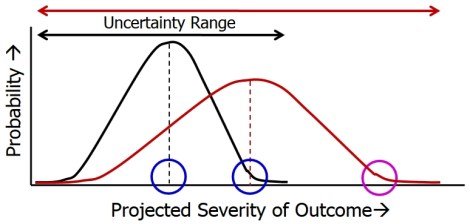

So this is a basic risk matrix: on the vertical axis you have probability, on the horizontal axis, severity. The two lines are risk assessments. As you can see, the black line is narrowly centered around a particular severity, while the red line sprawls across a wide range of severities — in other words, there’s more uncertainty about the red scenario. Nonetheless, even though the red scenario is not as probable as the black scenario, it has long risk “tails,” including the one on the right that contains extremely severe outcomes. It contains more risk.
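The same point can be sketched numerically. The example below assumes, purely for illustration, that both assessments are bell curves centered on the same severity and differ only in spread; the curves in Gulledge's graph are not necessarily normal, and the scale, the threshold, and every number here are hypothetical.

```python
from statistics import NormalDist

# Hypothetical severity assessments on an arbitrary 0-10 scale.
# Same central estimate, different amounts of uncertainty.
confident = NormalDist(mu=3.0, sigma=0.5)  # narrow, like the black line
uncertain = NormalDist(mu=3.0, sigma=1.5)  # wide, like the red line

EXTREME = 6.0  # hypothetical cutoff for "extremely severe"


def tail_probability(dist: NormalDist, threshold: float) -> float:
    """Probability that severity ends up above the threshold."""
    return 1.0 - dist.cdf(threshold)


confident_tail = tail_probability(confident, EXTREME)
uncertain_tail = tail_probability(uncertain, EXTREME)

# The narrow assessment puts essentially no weight beyond the cutoff;
# the wide one puts roughly 2.3 percent there.
print(f"confident: {confident_tail:.6f}")
print(f"uncertain: {uncertain_tail:.6f}")
```

Under these assumptions, widening the assessment while keeping the same best guess is what moves probability into the extreme outcomes.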

When it comes to risk management, uncertainty is not a reason to delay planning; it is information that informs planning.

So, why should we prefer the risk management approach? Pardon me a long excerpt from the report:

Risk management is a practical process that provides a basis for decision makers to compare different policy choices. It considers the likely human and financial costs and benefits of investing in prevention, adaptation and contingency planning responses. Some risks it is not cost effective to try and reduce, just as there are some potential impacts to which we cannot feasibly adapt to while retaining current levels of development and security.

Risk management approaches do not claim to provide absolute answers but depend on the values, interests and perceptions of specific decision makers. Risk management is as much about who manages a risk as it is about the scientific measurement of a risk itself. The Maldives will have a different risk management strategy to Russia; Indian farmers will see the balance of climate risks differently from the Indian steel industry.

This is crucial: the point is not to find the “right” or optimal policy, but to provide a framework that allows comparisons between policies, a framework that incorporates both science and the “values, interests and perceptions” of local decisionmakers. The framework won’t settle the disputes; it will simply clarify them:

Legitimate differences in risk management strategies will form much of the ongoing substance of climate change politics. All societies continually run public debates on similar existential issues: the balance of nuclear deterrence vs. disarmament, civil liberties vs. anti-terrorism legislation, international intervention vs. isolationism. Decisions are constantly made even when significant differences remain over the right balance of action. Political leadership has always been a pre-requisite in the pursuit of national security. We should expect the politics of climate change to follow similar patterns.

Implementing an explicit risk management approach is not a panacea that can eliminate the politics of climate change, either within or between countries. However, it does provide a way to frame these debates around a careful consideration of all the available information, and in a way that helps create greater understanding between different actors.

With that broad-brush introduction, let’s take a quick look at how it would apply to climate change.

Getting back to our definition of risk: the risk of dangerous climate change equals the probability that it will occur multiplied by the severity of its social and economic impacts. Attempts to reduce the probability are known as mitigation. Attempts to make social and economic systems more resilient and thus reduce the severity of climate impacts are known as adaptation. Mitigation and adaptation are both tools to reduce risk.

One important feature of climate projections tends to be invisible from the cost-benefit point of view: the presence of “long-tail risks,” the low-probability, high-impact stuff out on the end of the probability curve. Think “temperature rises by 6 degrees, ice sheets collapse, seas rise by 20 meters, drought covers the globe, remaining humans run feral through a post-apocalyptic hellscape.”

We would really, really like to avoid that kind of thing. But if we’re just optimizing toward the average projection, we’ll take no account of those tails. Risk management calls for them to be incorporated.

I’m going to put another of Gulledge’s graphs below to illustrate a point. It may make your eyes bleed, but take a deep breath.

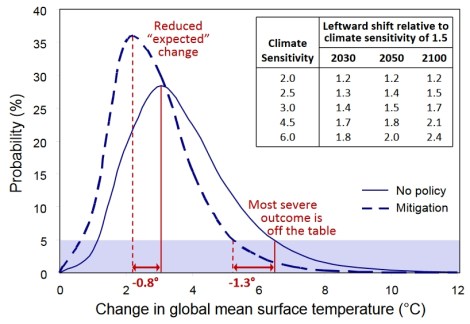

The solid blue line is the range of projections for climate change without mitigation (don’t worry about the specific numbers; it’s meant to be illustrative). As you can see, over on the right, that blue line has a long tail of risks that are fairly low probability (5 percent or less) but extremely dangerous, with average temperature increases of over 6 degrees, which would be apocalyptic. The dotted blue line is the same range of projections incorporating steady mitigation of around 1 percent a year (again, the specific numbers don’t matter).

The key thing to notice: Mitigation reduces the expected change (the top point of the probability curve) by 0.8 degrees, but it reduces the long-tail scenarios by 1.3 degrees. In other words, the minute you start mitigating, you start disproportionately taking the worst, scariest risks off the table.
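Why would the tail move more than the peak? One way to see it is with a right-skewed curve. The sketch below assumes warming follows a lognormal distribution and that mitigation lowers its median; this is an illustrative stand-in, not the model behind Gulledge's graph, and the medians and spread are hypothetical.

```python
import math
from statistics import NormalDist

SIGMA = 0.3  # hypothetical spread of the log of warming


def lognormal_mode(mu: float, sigma: float) -> float:
    """Peak (most likely value) of a lognormal distribution."""
    return math.exp(mu - sigma**2)


def lognormal_quantile(mu: float, sigma: float, q: float) -> float:
    """Value below which a fraction q of outcomes fall."""
    return math.exp(mu + sigma * NormalDist().inv_cdf(q))


# Hypothetical medians, in degrees of warming.
mu_no_mitigation = math.log(4.0)
mu_with_mitigation = math.log(3.2)

# How far the peak of the curve moves.
mode_drop = (lognormal_mode(mu_no_mitigation, SIGMA)
             - lognormal_mode(mu_with_mitigation, SIGMA))

# How far the 95th percentile (the start of the long tail) moves.
tail_drop = (lognormal_quantile(mu_no_mitigation, SIGMA, 0.95)
             - lognormal_quantile(mu_with_mitigation, SIGMA, 0.95))

print(f"peak moves down by {mode_drop:.2f} degrees")
print(f"95th percentile moves down by {tail_drop:.2f} degrees")
```

In this sketch, lowering the median scales the whole curve down by the same fraction, so the outcomes that started out largest fall the most in absolute terms.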

This is a crucial benefit of mitigation that rarely shows up in our policy debates, because it doesn’t show up in cost-benefit optimizing. But eliminating or reducing those long-tail risks ought to matter. It ought to count as “added value” in our mitigation plans.

Another crucial aspect of uncertainty that gets overlooked: Even the most ambitious climate plans currently on the table offer only a chance of limiting global temperature rise to 2 degrees (the widely agreed threshold of safety). But if a mitigation plan offers a 50 percent chance of hitting the 2 degree target, that means there’s a 50 percent chance of going higher. So even if you’re wildly aggressive on mitigation, you can’t put all your adaptation eggs in the 2 degree basket. You have to plan for the possibility of higher temperatures.

With all that said: Here is what the authors propose as a climate change risk management framework, in handy graphic form:

The details in the right-hand column are mostly self-explanatory, though they are explored at greater length in the report. The main takeaways are on the left side: prudent risk management suggests that we should try to hit 2 degrees, build and ruggedize as though we will hit 3-4 degrees, and develop contingency plans for the catastrophic 5-7 degree scenarios.

The important thing is not the specific numbers. It may be that science will develop (or our risk tolerance will increase or decrease) and we’ll tweak them up or down. It may be that different communities will estimate the risks differently. The important thing is the framework itself, which creates a common space for divergent worldviews and perspectives. This isn’t another clash of “believers vs. skeptics.” It’s about how much risk you’re willing to take on and what risks are worth hedging against. The framework won’t end political debates — there’s no “science says to do XYZ” here — but it will allow us to clarify where, and over what, we genuinely differ.