This story was originally published by Mother Jones and is reproduced here as part of the Climate Desk collaboration.

Signs of human impact on the planet are everywhere. Sea levels are rising as ice at both poles melts; plastic waste clogs the ocean; urban sprawl paves over landscapes while industrial agriculture empties aquifers. Between climate change, urban development, and straight-up, old-school pollution, the Earth we inhabit now would be scarcely recognizable to our earliest ancestors 150,000 years ago.

In fact, these changes are so pronounced, and their connection to human activity so obvious, that many scientists now believe we’ve already ventured well past a remarkable tipping point: Homo sapiens, they argue, have now surpassed nature as the dominant force shaping the Earth’s landscapes, atmosphere, and other living things. Units of deep geologic time often are defined by their dominant species: 400 million years ago fish owned the Devonian Period; 200 million years ago dinosaurs ruled the Mesozoic Era. Today, humans dominate the Anthropocene.

If defining what the Anthropocene represents is straightforward, assigning it a commencement date has proven a monumental challenge. The term was first proposed by Russian geologist Aleksei Pavlov in 1922, and since then it has occupied, off and on, the attention of the niche group of scientists whose job it is to decide how to slice our planet’s 4.5 billion-year history into manageable chunks. But in 2009, as climate change increasingly gained traction as a matter of public interest, the idea of actually making a formal designation started to appear in talks and papers. Today, if the scientific literature is any indication, the debate is fully ignited.

In fact, “it has been open season on the Anthropocene,” said Jan Zalasiewicz, a University of Leicester paleogeologist who is a leading voice in the debate. Within the last month a heap of new papers have come out with competing views on whether the Anthropocene is worthy of a formal designation, and if so, when exactly it began. The latest was published in Science recently.

In some cases, geologic time periods are demarcated by a mass extinction or catastrophic natural disaster (the meteor that likely killed off the dinosaurs being the classic example). In other cases it can be the emergence of an important new group of species. But either way, Zalasiewicz explained, it has to be something that is readily identifiable in the global fossil record. That’s what makes the Anthropocene so difficult to nail down. We’re leaving behind plenty of fossils: Not just our bones, but those of animals and plants we’ve relocated across the globe, not to mention the chemicals and gases we leave in the atmosphere and soil, and all of our tools, buildings, and other physical objects.

But it’s hard to say, without the benefit of thousands or millions of years of hindsight, which one key signal in the geologic record marks the point when humans went from just another species to the predominant force on the planet.

In January, Zalasiewicz proposed a specific date: July 16, 1945, when the first nuclear bomb was detonated in Alamogordo, N.M. Zalasiewicz readily acknowledges that humans began to have an impact on the planet well before then. But that blast and the thousands that followed it, he argues, left behind a residue of radioactive elements that serve as the “least worst” indicator in the geologic record of global-scale human interference.

In March, another group of geologists in the United Kingdom proposed a similar timeframe, but for a different reason. The 1950s, they argued, was the first time when carbon ash particles characteristic of widespread fossil fuel combustion began to appear in soil records.

Meanwhile, yet another paper advocated pushing the date back a few hundred years. These researchers pointed to a marked drop in atmospheric carbon dioxide in the mid-1600s, known as the Orbis spike. At that time, widespread disease ballooned in the wake of European emigration to the Americas and decimated the human population to a point where forests rebounded enough that records of carbon in the geologic record changed more profoundly than anytime in the preceding 2,000 years.

But even that doesn’t go back nearly far enough, according to the most recent research. William Ruddiman, a paleoclimatologist at the University of Virginia, thinks we should look for the start of the Anthropocene all the way back when humans first started to cultivate crops, up to 11,000 years ago.

“How can you define the Anthropocene as beginning in 1945 when most of the forests had been cut and most of the prairies had been plowed and planted?” Ruddiman said. “It just seems senseless to define the era of major human effects on Earth as beginning when huge chunks of that history had already happened.”

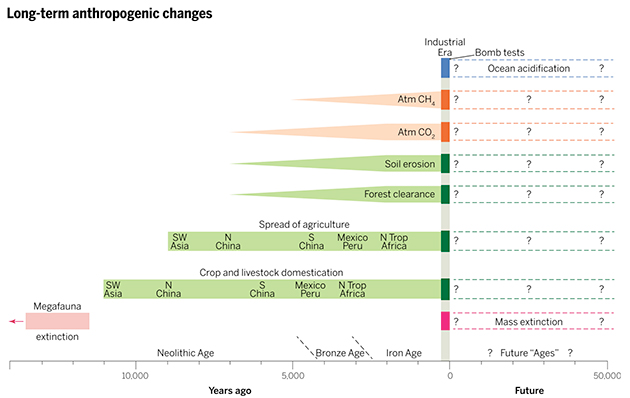

Ruddiman makes a compelling point: Take a look at this chart from his paper, which shows that humans began to dramatically change their environment many thousands of years before anyone figured out how to power a factory by burning coal:

Mother Jones/Ruddiman et al., Science 2015

Ruddiman is quick to point out a serious caveat with this argument. Unlike the Industrial Revolution or the atomic bomb, which made their impact on a global scale in what was, geologically speaking, the blink of an eye, agriculture crept slowly across the planet. Zalasiewicz agrees: “Because farming took many thousands of years, that horizon is very different across the world. So it cannot be a geological time unit.”

Is defining the Anthropocene just a question of semantics, the answer to which will matter only to a tiny clique of geology nerds? Ruddiman thinks so. But Zalasiewicz says that at a time when humans’ unprecedented impact on the planet has become a matter of intense scrutiny by politicians, businesses, and the public at large, putting a formal moniker on this time period could be a useful framework in which to understand our actions — and possibly change them.

In any case, paleoclimatologists seem to agree that the same scientific methods used to identify periods in deep history may be poorly equipped to wrangle with a period we’re living through now.

“We should hold off and wait a thousand years,” Ruddiman said. “And then make a decision.”