This is the first installment in Grist’s Feeding the City series, which we’ll be running over the course of the next several weeks. (Learn more.)

The main building of the groundbreaking urban farm Growing Power in Milwaukee, Wis. Photo: OrganicNation via Flickr

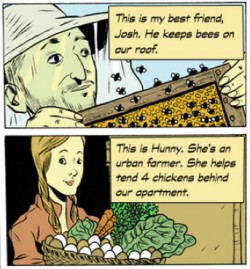

Grist’s new comic series, My Intentional Life, features food-growing hipsters.

“Few things scream ‘Hipster’ like an apartment garden.” Thus spake the New York City music magazine Death + Taxes, and it’s easy to see why. In trendy neighborhoods from Williamsburg, Brooklyn, to San Francisco’s Mission district, urban youth are nurturing vegetables on windowsills, fire escapes, and roofs. Down on the street, they tend flourishing garden plots, often including chickens and bees. Even Grist has launched a comic strip (left) devoted to the exploits of urban-hipster homesteaders.

But growing food in the city isn’t just the province of privileged youth — in fact, the recent craze for urban agriculture started in decidedly unhip neighborhoods. Nor is it anything new. As I’ll show in this rambling-garden-walk of an essay, urban agriculture likely dates to the birth of cities. And its revival might just be the key to sustainable cities of the future.

Gardens sprout where factories once thrived

Like jazz, soul, and hip-hop, the recent revival of city agriculture started in economically marginal areas before taking hipsters by storm. Unfashionable neighborhoods hit hard by postwar suburbanization and the collapse of U.S. manufacturing proved to be fertile ground for the garden renaissance.

Across the country, the postwar urban manufacturing base began to melt away in the 1970s, as factories fled to the union-hostile South, and later to Mexico and Asia. Meanwhile, the highway system and new development drew millions of white families to the leafy lawns of the exurban periphery.

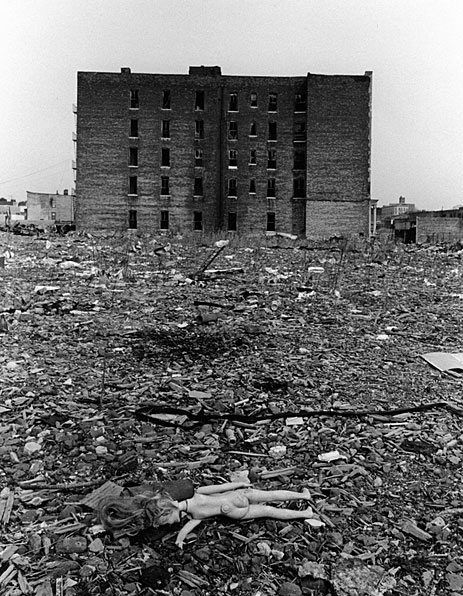

After a wave of arson in the 1970s, this building was the last left standing in the block of East 173rd through 174th Street in the South Bronx. Photo: Mel Rosenthal of Duke University Libraries

As a result of high unemployment and plunging occupancy rates, inner-city rents fell, and many landlords suddenly owed more in property taxes than they were making in rent. Too often, the “solution” was eviction, arson, and a fast-and-dirty insurance settlement. A 1977 Time article documented the phenomenon:

In ghetto areas like the South Bronx and [Chicago’s] Humboldt Park, landlords often see arson as a way of profitably liquidating otherwise unprofitable assets. The usual strategy: drive out tenants by cutting off the heat or water; make sure the fire insurance is paid up; call in a torch. In effect, says [then-New York City Deputy Chief Fire Marshal John] Barracato, the landlord or businessman “literally sells his building back to the insurance company because there is nobody else who will buy it.” Barracato’s office is currently investigating a case in which a Brooklyn building insured for $200,000 went up in flames six minutes before its insurance policy expired.

In addition to New York City and Chicago, Time identified Detroit, Boston, and San Francisco as major sites of the arson plague. It was from its ashes that the modern-day community-garden movement sprang. In hollowed-out neighborhoods, residents got busy cleaning up newly vacant lots, hauling out debris, and bringing in topsoil and seeds. As Laura J. Lawson shows in her 2005 book City Bountiful: A Century of Community Gardening in America, fresh food was only one of the goods that neighborhoods harvested from gardens. “Gardening,” she writes, “became a venue for community organizing intended to counter inflation, environmental troubles, and urban decline.”

By the 1990s, urban farming was beginning to take root. In 1993, a former professional basketball player and corporate marketer named Will Allen purchased a tract of land on the economically troubled North Side of Milwaukee, Wis. Allen hoped to use the space to open a market that would sell vegetables he grew on his farm outside Milwaukee. But then, working with unemployed youth from the city’s largest housing project, nearby Westlawn Homes, Allen soon began growing food right in Milwaukee.

Eventually, that effort would morph into Growing Power, now the nation’s most celebrated urban-farming project, and the reason Allen won a MacArthur “genius grant.” Similar initiatives cropped up in low-income neighborhoods in Brooklyn (East New York Farm and Added Value), Boston (the Food Project), and Oakland (City Slicker Farms).

Breaking down the false urban-rural divide

Post-industrial inner-city neighborhoods may have sprouted the current urban-ag craze, but the act of growing food amid dense settlements dates to the very origin of cities. In her classic book The Economy of Cities, urban theorist Jane Jacobs argues convincingly that agriculture likely began in dense tool-making and trading settlements that evolved into cities.

In that book, Jacobs exploded the long-held assumption that people first established agriculture, then established cities — the “myth of agricultural primacy,” as she calls it. Jacobs shows that in pre-historic Europe and the Near East, pre-agricultural settlements of hunters have been identified, some of them quite dense in population. As the settled people began to exploit resources like obsidian to create tools for hunting, a robust trade between settlements began to flow.

Eventually, edible wild seeds and animals joined obsidian and tools as tradeable commodities within settlements, and the long process of animal and seed domestication began, right within the boundaries of these proto-cities. And when organized agriculture began to flourish, cities grew dramatically, both in population and complexity. Eventually, some (but not all) agricultural work migrated to land surrounding the emerging cities, and the urban/rural divide was born. (More rigorous scholarship, especially that of the Danish economist Ester Boserup, confirms that dense settlements preceded agriculture.)

As Jacobs shows, cities and agriculture co-evolved, and the concept of “rural” emerged from them:

Both in the past and today, the separation commonly made, dividing city commerce and industry from rural agriculture, is artificial and imaginary. The two do not come down through two lines of descent. Rural work — whether that work is manufacturing brassieres or growing food — is city work transplanted.

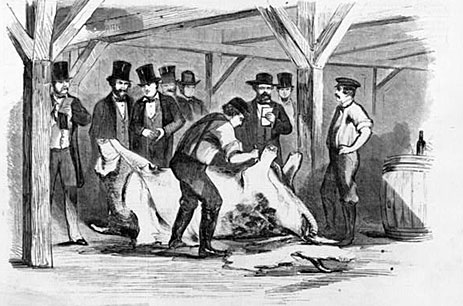

Detail of an engraving from an 1858 newspaper showing a scene at New York City’s ‘Offal Dock,’ in which a diseased dairy cow from the 16th St. swill-cow stables is being dissected. Photo: Library of Congress

And much agricultural work remained within cities over the millennia. In 19th-century New York City, dairy farming proliferated (if it didn’t exactly thrive), as shown by this passage from Nature’s Perfect Food: How Milk Became America’s Drink, by UC Santa Cruz sociologist and food-studies scholar E. Melanie Dupuis:

By the mid-19th century, “swill” milk stables attached to the numerous in-city breweries and distilleries provided [New York City] with most of its milk. There, cows ate the brewers grain mush that remained after distillation and fermentation … As many as two thousand cows were located in one stable. According to one contemporary account, the visitor to one of these barns “will nose the dairy a mile off … Inside, he will see numerous low, flat pens, in which more than 500 milch cows owned by different persons are closely huddled together amid confined air and the stench of their own excrements.”

This was the dawn of fresh milk as a mass-produced, mass-consumed commodity, and the filthy conditions in these urban feedlots produced what Dupuis calls “an incredibly dangerous food … a thin, bluish fluid, ridden with bacteria yet often called ‘country milk.’” In line with Jacobs’ argument that rural work is “city work transplanted,” here we find evidence that today’s concentrated animal feeding operation, or CAFO, originated in cities before migrating to rural areas, where CAFOs are found today. (Of course, it was a chemist residing in the French city of Strasbourg, Louis Pasteur, who came up with the technology for making CAFO milk safe to drink … more or less.)

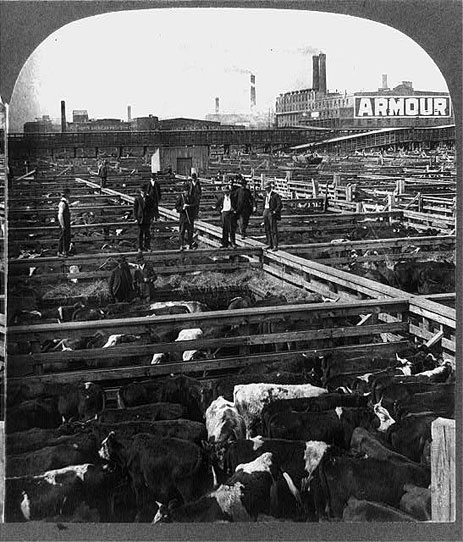

The Chicago stockyards circa 1909. Photo: Kelley & Chadwick

An even stronger model for today’s CAFOs can be found in Chicago’s notorious stockyards, which didn’t close for good until 1971. Meatpacking is now an exclusively rural industry, clustered more or less out of sight in remote counties of Iowa, North Carolina, and Arkansas. But for nearly a century starting in the mid-1800s, farmers from Chicago’s hinterland would haul their cows to the city’s vast slaughterhouses, where they would be fed in pens while awaiting their fate. According to the Chicago Historical Society, by 1900 the stockyards “employed more than 25,000 people and produced 82 percent of the meat consumed in the United States.” Famous meatpacking giants like Armour and Swift got their start there.

Cities didn’t just innovate techniques that would later become associated with large-scale, chemical-dependent agriculture; they also incubated sustainable ones. The so-called “French-intensive” method of growing vegetables, in which large amounts of compost are added annually to densely planted raised beds, is one of the most productive and sustainable forms of organic agriculture used today. And guess what? It developed not in the countryside, but rather within the crowded arrondissements of 19th-century Paris. Maine farmer Eliot Coleman, one of the leading U.S. practitioners of the French-intensive style, credits those pioneering Parisian farmers with ingenious methods of extending growing seasons that are only just now coming into widespread use in the United States.

A modern-day community garden in Parc de Bercy, Paris, employs centuries-old intensive growing techniques. Photo: Cara Ruppert via Flickr

The French-intensive method hinges on a principle identified by Jane Jacobs, one that modern-day city residents (and planners) should take to heart: that cities are fantastic reservoirs of waste resources waiting to be “mined.”

Like all cities of its time, 19th-century Paris bristled with horses, the main transportation vehicle of the age. And where there are lots of horses, there are vast piles of horseshit. The city’s market gardeners turned that fetid problem into a precious resource by composting it for food production.

“This recycling of the ‘transportation wastes’ of the day was so successful and so extensive that the soil increased in fertility from year to year despite the high level of production,” Coleman writes. He adds that Paris’s market gardeners supplied the entire metropolis with vegetables for most of the year — and even had excess to export to England.

Of arugula, compost, and the city of the future

What I’m driving at is this: urban agriculture seems new and exotic, but it has actually been the norm since the dawn of farming 10,000 years ago. Celebrated as innovators, the market gardeners of today’s Milwaukee, Detroit, and Baltimore are actually restoring age-old traditions. It is the gardenless city — metropolises like Las Vegas or Phoenix that import the bulk of their food from outside their boundaries — that is novel and experimental.

And that experiment was engendered by the force that has transformed our food system over the past 100 years: the rise of chemical-intensive, industrial-scale agriculture. The easy fertility provided by synthetic nitrogen fertilizer (an innovation engineered by the decidedly urban German chemical conglomerate BASF in the run-up to World War I) made the kind of nutrient recycling performed by Paris’s urban farmers seem obsolete and backward. Meanwhile, the rise of fossil-fuel-powered transportation banished the horse from cities, taking away a key source of nutrients.

The industrial-ag revolution led to regional specialization and stunning concentration. Most fruit and vegetables we consume now come from a few areas of California and Mexico; grain is grown almost exclusively in the Midwest; dairy farming happens mainly in California and Wisconsin. These wares are shipped across the continent in petroleum-burning trucks. After World War II — during which town and city gardens provided some 40 percent of vegetables consumed in the United States — city residents no longer needed to garden for their sustenance. Food production became a “low value,” marginal urban enterprise, and planners banished it from their schemes. Supermarkets, stocked year-round with produce from around the world and a wealth of processed food, more than filled the void.

Fueled by fossil energy, cities could afford to banish agriculture completely to the hinterlands. For the first time in history, a clean urban/rural divide opened.

But conditions are changing rapidly; the great experiment of the city as pure food consumer may yet prove a failure. In low-income areas of cities nationwide, supermarkets have pulled out, leaving residents with the slim, low-quality, pricey offerings of corner stores. The easy availability of fossil fuels, on which the food security of cities has relied for decades, is petering out, and the consequences of burning them are building up.

Meanwhile, a titanic amount of the food that enters cities each year leaves as garbage entombed in plastic and headed to the landfill, a massive waste of a resource that could be composted into rich soil amendments, as Paris’s 19th-century farmers did with horse manure.

According to the EPA, fully one-quarter of the food bought in America ends up in the waste stream, or 32 million tons per year. Of that, less than 3 percent gets composted. (An upcoming slideshow in the Feeding the City series will show you some easy ways to do so, even if you live in a studio apartment.) The rest ends up in landfills, where it slowly rots, emitting methane, a greenhouse gas 21 times more potent than carbon dioxide. The EPA reports that wasted food in landfills accounts for a fifth of U.S. methane emissions: the second-largest human-related source of methane in the United States.

Imagine the positive ripples that would occur if city governments endeavored to compost that waste as a city service, and distributed the products to urban gardeners.

Milwaukee “genius” farmer Will Allen atop one of Growing Power’s immense (and immensely profitable) compost piles. Photo: Growing Power

In this regard, policy has fallen behind grassroots action. Milwaukee’s Growing Power has turned the urban waste stream into a powerful engine for growing food. Most urban agriculture operations today are net importers of soil fertility: they bring in topsoil and compost from outside to amend poor urban soils. Growing Power has become a net exporter. In 2008, as the New York Times Magazine reported in a profile of founder Will Allen, Growing Power converted 6 million pounds of spoiled food into 300,000 pounds of compost. The organization used a quarter of it to grow enough food to feed 10,000 Milwaukee residents, and sold the rest to city gardeners.

Growing Power represents a throwback, both to the African-American farming traditions of Allen’s youth and to the urban-farming techniques of 19th-century France, which Allen cites as an inspiration. It also represents the vanguard of a new “New Urbanist” movement that sees food production as a vital engine of city development, as Daniel Nairn wrote here a few weeks ago and will describe in more detail in a forthcoming essay for this series.

As the ravages of fossil fuel use and abuse pile up, the urban/rural rift that opened up a century ago may need to close.

It’s important not to overstate the case here. Today’s dense cities could not exist without highly productive rural areas providing the bulk of food. Not even the most committed Brooklyn market gardener dreams of supplying the metropolis with flour from wheat grown on French-intensive plots. Growing grain in the city makes no sense, and no one wants to see cows grazing on Central Park’s Great Lawn. Any realistic vision of “green cities” sees them as consumption hubs within larger regional foodsheds.

But cities need not, and indeed likely cannot, continue as pure consumers of food and producers of waste. Intensive production of perishable vegetables, fertilized by composted food waste, can bring fresh produce to food deserts, provide jobs as well as opportunities for community organizing, and also shrink a city’s ecological footprint.

Urban gardening may “scream ‘Hipster,’” but it may also bloom into a way forward for those who want to build sustainable cities of the future.